Running DeepSeek inside IntelliJ for Maximum Productivity

Did you know you can use third-party AI models inside IntelliJ?

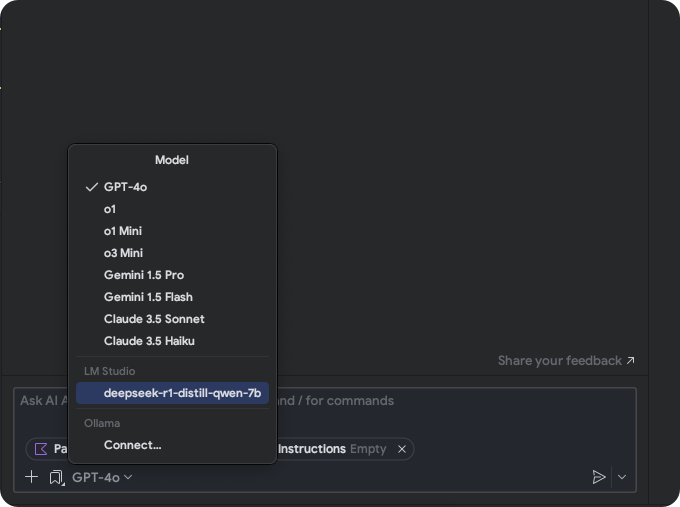

JetBrains AI Assistant gives developers access to several cloud-based models (see the JetBrains AI pricing page for plans):

- GPT-4o

- o1, o1 Mini, o3 Mini

- Gemini 1.5 Pro, Gemini 1.5 Flash

- Claude 3.5 Sonnet, Claude 3.5 Haiku

But if you want to use a model that isn't on this list, you can run it locally with zero dependency on cloud services. In our case, we want to integrate DeepSeek R1 Distill Qwen-7B locally and use it to generate code, write code documentation, explain code, and more.

Why DeepSeek R1 Distill Qwen-7B?

Here is how it compares with Claude 3.5 Haiku, one of the cloud models listed above, on coding benchmarks:

| Metric | DeepSeek R1 (7B) | Claude 3.5 Haiku (Cloud) |
|---|---|---|
| LiveCodeBench (Pass@1-COT) | 65.9 | 33.8 |
| SWE Verified (Resolved) | 49.2 | 50.8 |
| Data Processing | Fully local | Cloud-dependent |
| Cost | $0 | $0.25/million tokens |

Source: deepseek-ai/DeepSeek-R1-Distill-Qwen-7B on Hugging Face

What is “SWE Verified (Resolved)”? This metric reflects how well models resolve real-world software tasks from SWE-bench, a benchmark that tests AI systems on fixing GitHub issues in projects like Django and scikit-learn. “Resolved” means the AI-generated code fully addressed the issue and passed all tests. For details, see the SWE-bench website and the SWE-bench datasets on Hugging Face.
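To make the “Resolved” criterion concrete, here is a minimal Python sketch of how a resolved rate could be computed. It assumes each task reports two outcomes, mirroring SWE-bench's split between tests that should go from failing to passing (the issue is fixed) and tests that must keep passing (nothing else broke). The `TaskResult` structure and the function names are hypothetical and are not part of any SWE-bench tooling.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Outcome of one benchmark task (hypothetical structure for illustration)."""
    fail_to_pass_ok: bool  # tests that reproduced the issue now pass
    pass_to_pass_ok: bool  # tests that already passed still pass


def is_resolved(result: TaskResult) -> bool:
    # A task counts as resolved only if the patch fixes the issue
    # AND does not break any previously passing tests.
    return result.fail_to_pass_ok and result.pass_to_pass_ok


def resolved_rate(results: list[TaskResult]) -> float:
    """Percentage of tasks resolved, the kind of number reported in the table."""
    if not results:
        return 0.0
    resolved = sum(1 for r in results if is_resolved(r))
    return 100.0 * resolved / len(results)


if __name__ == "__main__":
    sample = [
        TaskResult(True, True),   # resolved
        TaskResult(True, False),  # fix broke other tests
        TaskResult(False, True),  # issue not fixed
        TaskResult(True, True),   # resolved
    ]
    print(f"{resolved_rate(sample):.1f}% resolved")
```

With the sample above, two of four tasks meet both conditions, so the script reports 50.0% resolved. A partial fix that breaks other tests earns no credit.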

Alright, let’s proceed with the setup! 🚀

Running DeepSeek Locally with LM Studio

To use DeepSeek inside IntelliJ, we’ll run it through LM Studio, a desktop app for downloading and running models locally. 🚀

Step 1: Install LM Studio

Download and install LM Studio from the official site.

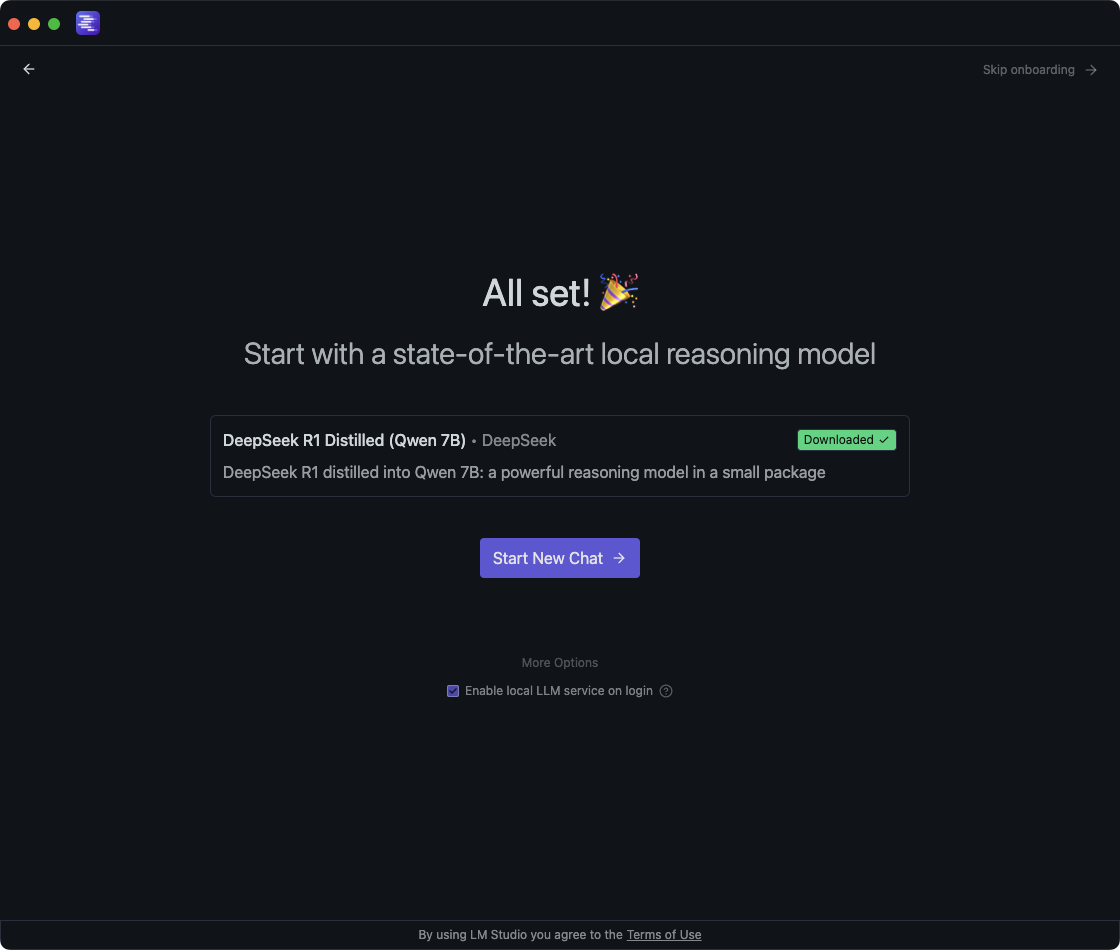

Step 2: Download the DeepSeek Model

Inside LM Studio:

- Search for DeepSeek R1 Distill Qwen-7B and pick a variant (quantization) that fits your system’s RAM and available disk space.

- Download and wait for it to finish.

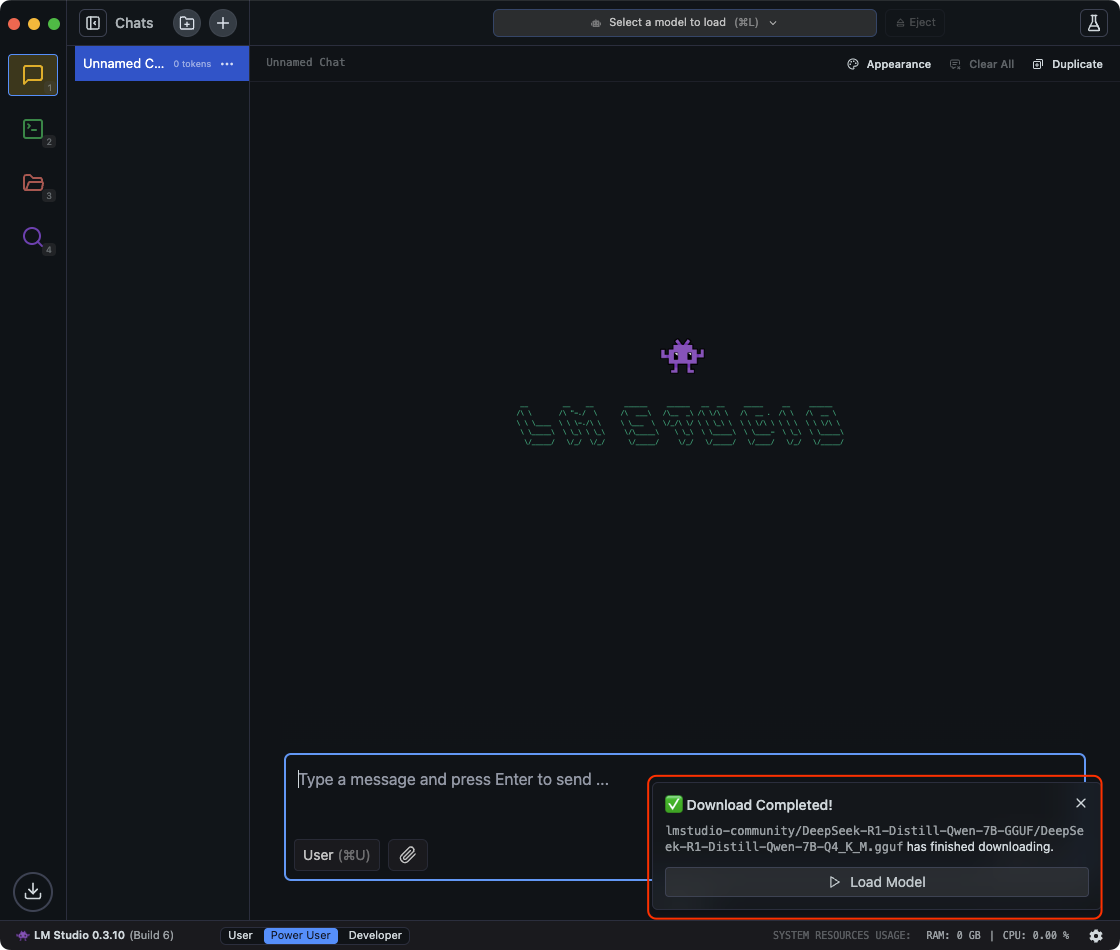
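To judge whether a variant fits your machine, a rough rule of thumb for the weights alone is parameters × bits per weight ÷ 8 bytes. The sketch below applies it. The 7e9 parameter count is a rounded assumption, and real quantized files (plus the memory used while running, such as the context cache) are usually somewhat larger than this estimate.

```python
def estimate_weights_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes).

    Rough rule of thumb only: it ignores the context (KV) cache, runtime
    buffers, and the metadata stored in the model file.
    """
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    params = 7e9  # assumption: rounded "7B" figure; the exact count is slightly higher
    for bits in (4, 8, 16):
        print(f"{bits:>2}-bit: ~{estimate_weights_gb(params, bits):.1f} GB")
```

For a 7B model this gives roughly 3.5 GB at 4 bits, 7 GB at 8 bits, and 14 GB at 16 bits, which is why 4-bit variants are the usual choice on laptops.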

Step 3: Load the Model

- Once downloaded, it will appear in LM Studio.

- Click “Load Model” to activate it.

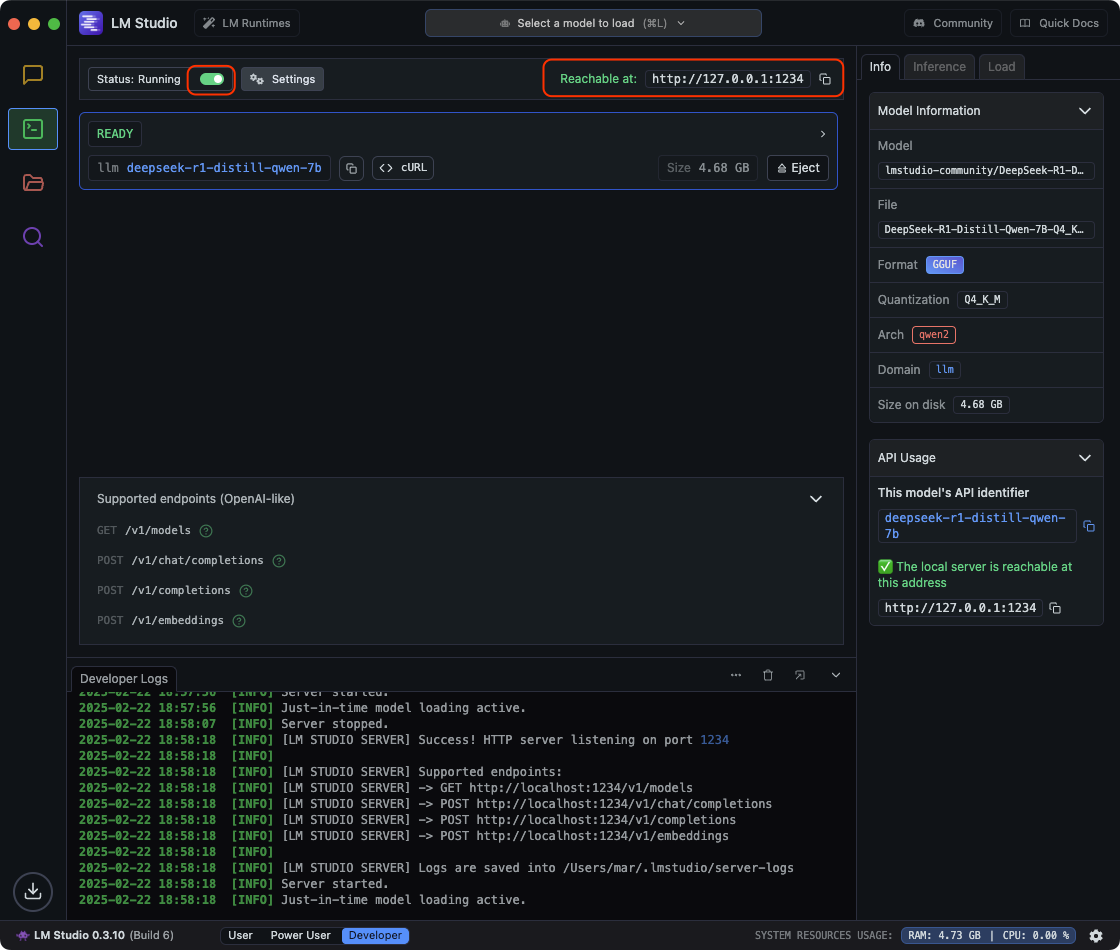

Step 4: Start the Local Server

- Go to the Developer tab in LM Studio.

- Start the local server and copy its localhost URL (by default, http://localhost:1234).

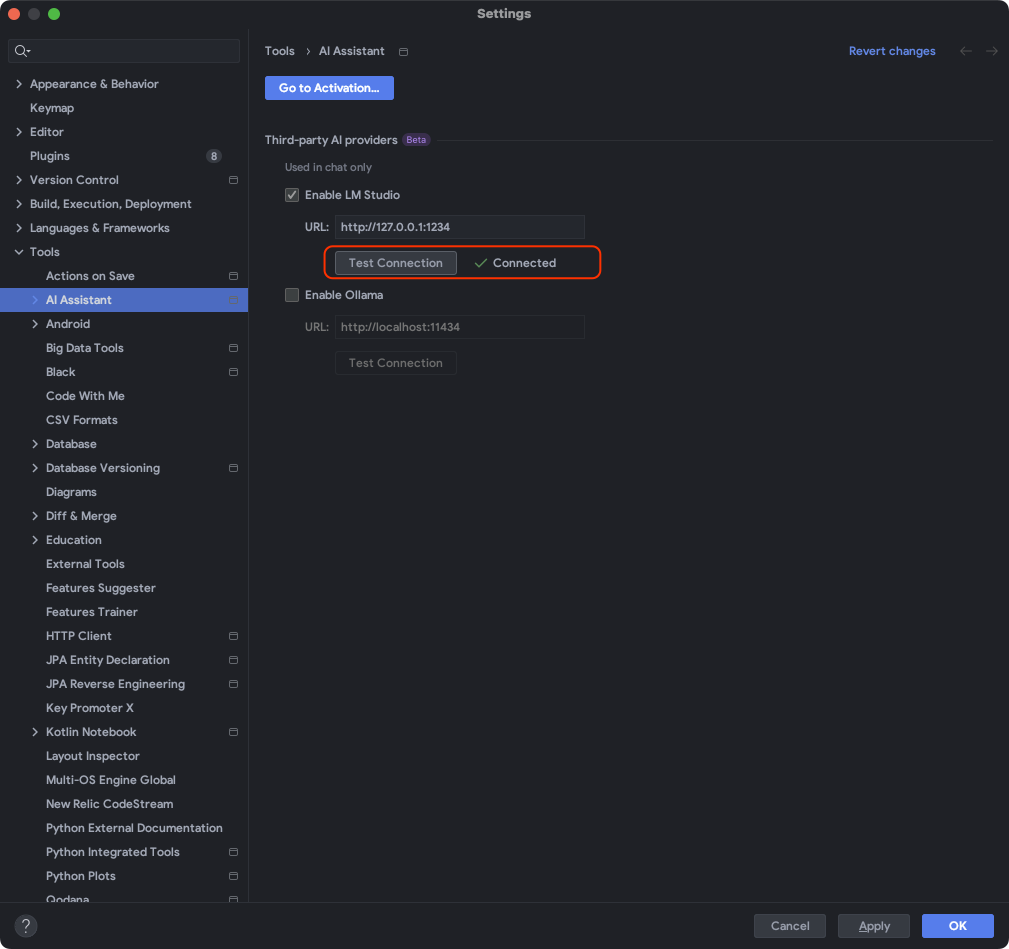
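Before configuring IntelliJ, you can check that the server responds. LM Studio's local server exposes an OpenAI-compatible REST API, so a GET request to `/v1/models` should list the available models. The minimal Python sketch below uses only the standard library and assumes the default address `http://localhost:1234`; replace `BASE_URL` with the URL you copied if it differs.

```python
import json
import urllib.request

# Assumption: LM Studio's default server address; replace it with the URL
# shown in the Developer tab if yours differs.
BASE_URL = "http://localhost:1234"


def parse_model_ids(payload: str) -> list[str]:
    """Extract model identifiers from an OpenAI-style /v1/models response."""
    data = json.loads(payload)
    return [model["id"] for model in data.get("data", [])]


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> list[str]:
    """Query the local server and return the IDs of the available models."""
    url = base_url.rstrip("/") + "/v1/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_ids(response.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        for model_id in list_models():
            print(model_id)
    except OSError as exc:  # includes urllib's URLError and socket timeouts
        print(f"Server not reachable at {BASE_URL}: {exc}")
```

If the server is running, the script prints one model ID per line (the exact ID depends on the variant you downloaded); otherwise it reports that the server is not reachable.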

Step 5: Configure IntelliJ AI Assistant

- Open IntelliJ settings.

- Navigate to AI Assistant > Local Models.

- Paste the localhost URL from LM Studio.

- Test the connection.

- Save the configuration.

Step 6: Use AI Features in IntelliJ

Once set up, you can use DeepSeek for:

- Code completion

- Code refactoring and enhancements

- Context-aware suggestions

- Code explanations and documentation
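AI Assistant makes these requests for you, but it can help to see roughly what one looks like, for example when debugging a slow or empty response. The sketch below sends an “explain this code” prompt to the OpenAI-compatible `/v1/chat/completions` endpoint. It assumes the default LM Studio address, and `MODEL_ID` is a hypothetical placeholder for the ID your server reports. Following the usage recommendations on the DeepSeek-R1 model card, it puts all instructions in the user message (no system prompt) and uses a temperature of 0.6. R1-style models may emit their reasoning inside `<think>` tags, so `strip_reasoning` removes that block; if your LM Studio version already separates the reasoning out, the helper simply leaves the text unchanged.

```python
import json
import re
import urllib.request

BASE_URL = "http://localhost:1234"  # assumption: LM Studio's default address
MODEL_ID = "deepseek-r1-distill-qwen-7b"  # hypothetical: use the ID your server reports


def build_request(code: str, model: str = MODEL_ID) -> dict:
    """Build an OpenAI-style chat completion payload asking for an explanation."""
    return {
        "model": model,
        # DeepSeek's model card suggests keeping all instructions in the
        # user prompt (no system prompt) and a temperature around 0.6.
        "messages": [
            {"role": "user", "content": f"Explain what this code does:\n\n{code}"}
        ],
        "temperature": 0.6,
    }


def strip_reasoning(text: str) -> str:
    """Remove a <think>...</think> reasoning block if the model emits one."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def explain(code: str, base_url: str = BASE_URL) -> str:
    """POST the prompt to the local server and return the model's answer."""
    payload = json.dumps(build_request(code)).encode("utf-8")
    req = urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=payload,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as response:
        body = json.loads(response.read().decode("utf-8"))
    return strip_reasoning(body["choices"][0]["message"]["content"])


if __name__ == "__main__":
    try:
        print(explain("def add(a, b):\n    return a + b"))
    except OSError as exc:  # connection refused, timeouts, HTTP errors
        print(f"Request failed: {exc}")
```

The long timeout is deliberate: a 7B reasoning model on a laptop can take a while to finish its chain of thought before answering.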

Supported Languages and Frameworks

Because DeepSeek runs through AI Assistant, it works across the languages and frameworks that IntelliJ-based IDEs support, including:

- Java, Kotlin, Android

- Python, Go

- Dart, Flutter, and more

Final Thoughts

With DeepSeek running locally, you get AI assistance with no per-token costs, no dependency on cloud services, and no code leaving your machine. Time to fix bugs and ship features faster!